In this article, I demonstrate how the text moderation feature of the Azure AI Content Safety service can be used to check the text in a string property against a blocklist, identifying harmful or unwanted content as part of the content publishing process in Optimizely CMS.

Text moderation on a string property using a blocklist can be achieved by installing the “Patel.AzureAIContentSafety.Optimizely” NuGet package, which is available from the Optimizely NuGet feed or the public NuGet feed.

After the NuGet package has been installed and the initial configuration and setup steps have been completed, add a boolean property decorated with the `[TextAnalysisBlocklistAllowed]` attribute to the Start Page type in Optimizely. This property is required to enable the blocklist text analysis functionality. More details can be found here.

Next, add two string properties to an Optimizely CMS content type that implements `IContent`.

The first string property requires the `[TextAnalysisBlocklistDropdown]` attribute; the procedure for this is shown here. The second string property requires the `[TextAnalysisBlocklist]` attribute; more information is provided here.

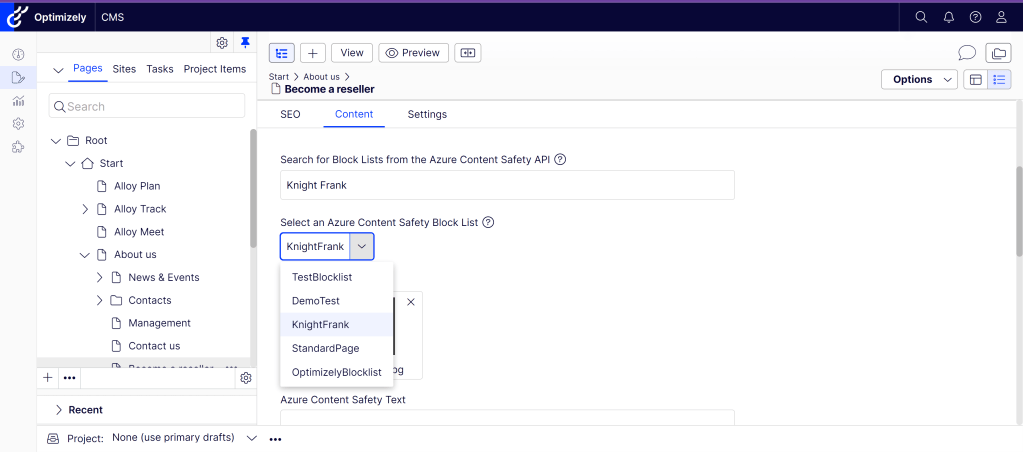

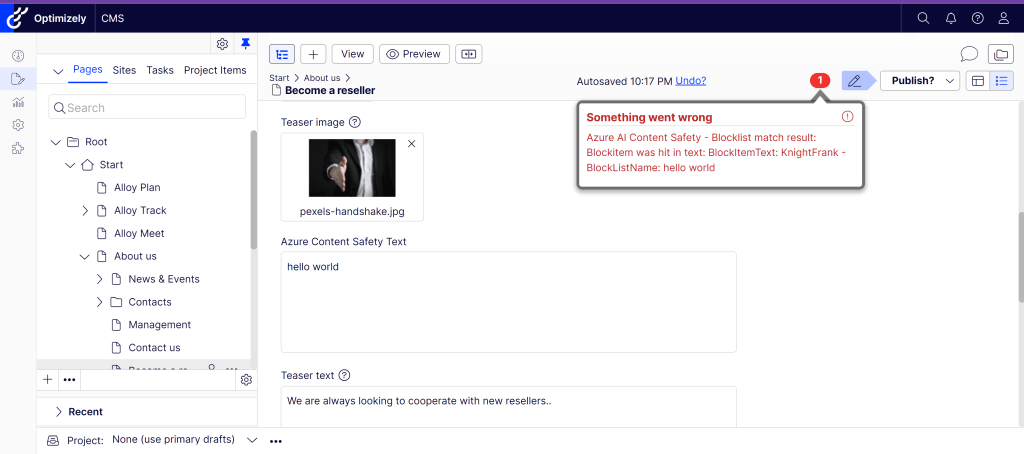

Once these properties have been added, an editor can choose a blocklist from the dropdown and enter text in the string property to be checked. The screenshot below shows an example of this.

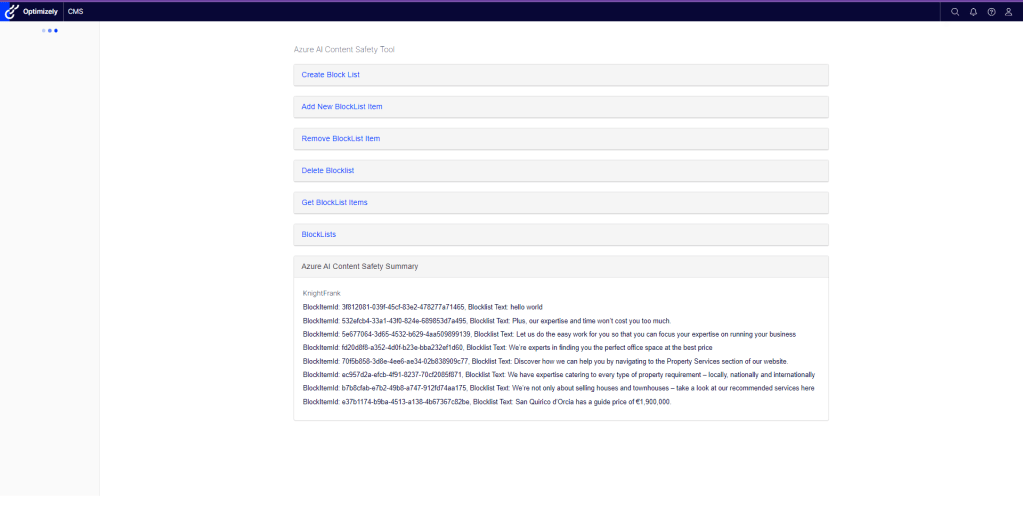

The screenshot below shows the items in the selected blocklist. These can be viewed in the Blocklist Management add-on, which is included in the “Patel.AzureAIContentSafety.Optimizely” NuGet package. For more details, visit this link.
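The add-on's internals are not shown in this article, but blocklist items ultimately live in the Azure AI Content Safety resource. As a rough, language-neutral sketch, this is how items could be added to a blocklist directly through the REST API. It assumes the `2023-10-01` API version, the `addOrUpdateBlocklistItems` operation and key-based authentication; the helper name `build_add_blocklist_items_request` is hypothetical, and the function only builds the request rather than sending it.

```python
import json
import urllib.parse
import urllib.request

# Assumption: the GA Content Safety REST API version; adjust if your resource uses another.
API_VERSION = "2023-10-01"


def build_add_blocklist_items_request(endpoint, api_key, blocklist_name, items):
    """Build (but do not send) a request that adds or updates items in a text blocklist.

    Hypothetical helper. `items` is a list of (text, description) tuples.
    """
    # Percent-encode the blocklist name so it is safe to place in the URL path.
    name = urllib.parse.quote(blocklist_name, safe="")
    url = (
        f"{endpoint.rstrip('/')}/contentsafety/text/blocklists/{name}"
        f":addOrUpdateBlocklistItems?api-version={API_VERSION}"
    )
    body = {
        "blocklistItems": [
            {"text": text, "description": description} for text, description in items
        ]
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": api_key,  # resource key (assumed auth method)
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned request to `urllib.request.urlopen` would send it; keeping construction separate from sending makes the request easy to inspect.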

With the steps above complete, the content is ready to be published and moderated against the selected blocklist.

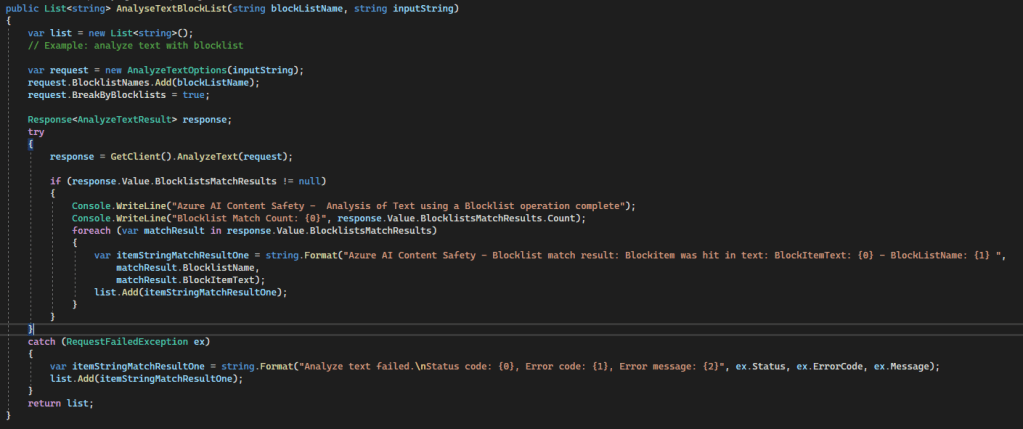

The image below shows the code used to analyse text against a blocklist with the Azure AI Content Safety API.

The response from the API, as written to the console:

`Azure AI Content Safety – Analysis of Text using a Blocklist operation complete`

`Blocklist Match Count: 1`
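For readers who want to try the same operation outside the CMS, here is a minimal Python sketch of the underlying REST call. It is not the package's code (which is .NET); it assumes the `text:analyze` operation at API version `2023-10-01`, key-based authentication, and a response whose `blocklistsMatch` array lists each hit. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: the GA Content Safety REST API version.
API_VERSION = "2023-10-01"


def build_analyze_text_request(endpoint, api_key, text, blocklist_names, halt_on_blocklist_hit=False):
    """Build (but do not send) a text:analyze request that also checks the given blocklists."""
    url = f"{endpoint.rstrip('/')}/contentsafety/text:analyze?api-version={API_VERSION}"
    body = {
        "text": text,
        "blocklistNames": list(blocklist_names),
        # When True, the service stops further analysis once a blocklist item is hit.
        "haltOnBlocklistHit": halt_on_blocklist_hit,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def blocklist_match_count(response_body):
    """Count blocklist hits in a text:analyze JSON response body."""
    result = json.loads(response_body)
    # The match list may be missing or empty when nothing matched.
    return len(result.get("blocklistsMatch") or [])


def analyze_text(endpoint, api_key, text, blocklist_names):
    """Send the request and return the number of blocklist matches (performs a network call)."""
    request = build_analyze_text_request(endpoint, api_key, text, blocklist_names)
    with urllib.request.urlopen(request) as response:
        return blocklist_match_count(response.read().decode("utf-8"))
```

Calling `analyze_text(endpoint, key, "some text", ["my-blocklist"])` against a real resource would return a count comparable to the `Blocklist Match Count` line above.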

Once text moderation is complete, if the text being published matches any item in the chosen blocklist, an error message appears in the CMS and the user is prevented from publishing the content, as shown in the screenshot below.

If the text doesn’t match any item in the chosen blocklist, the content is published and becomes available in the CMS.
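The publish-or-block decision above boils down to a simple rule on the analysis response. In Optimizely this kind of check is commonly wired into the `IContentEvents.PublishingContent` event by cancelling the action with a reason, but whether the package does exactly that is an assumption. The sketch below shows only the decision itself, in Python for neutrality; `publish_block_reason` and its message wording are hypothetical, and it assumes the `blocklistsMatch` response shape of the `2023-10-01` API.

```python
import json
from typing import Optional


def publish_block_reason(response_body: str) -> Optional[str]:
    """Return an error message if the analysis found blocklist matches, else None.

    Hypothetical helper: the message wording is illustrative, not the package's.
    """
    result = json.loads(response_body)
    matches = result.get("blocklistsMatch") or []
    if not matches:
        return None  # No hits: publishing may proceed.
    # Summarise which items were hit so the editor knows what to change.
    hits = ", ".join(
        f"'{m.get('blocklistItemText', '')}' ({m.get('blocklistName', '')})" for m in matches
    )
    return f"Publishing cancelled: text matched blocklist item(s) {hits}."
```

A `None` result corresponds to the content being published; any string corresponds to the error shown to the editor.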